Apache Kafka: Beginner's Guide

One morning, as I was booking a cab to my office, I noticed something fascinating: the driver’s location on the app was updating in real time. The car was moving smoothly, and I could see where my assigned driver was at that moment. This made me wonder - how does this happen? What kind of engineering happens behind the scenes to ensure real-time updates? Let us dive deeper.

The Naive Approach: Database Driven

One straightforward solution to tackle this problem would be to use a database. Here’s how it might work:

The driver’s app sends their location (latitude and longitude) to the server.

The server writes this data to the database.

The user’s (passenger’s) app queries the database periodically, say every second, to fetch the driver’s latest location.
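The three steps above can be sketched in a few lines. This is only an illustration: an in-memory SQLite database stands in for the real database, and the table name `driver_locations` and both function names are my own assumptions, not part of any real app.

```python
import sqlite3

# Assumption: in-memory SQLite stands in for the application's database.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE driver_locations ("
    "driver_id INTEGER PRIMARY KEY, latitude REAL, longitude REAL)"
)

def save_location(driver_id: int, latitude: float, longitude: float) -> None:
    """Server side: overwrite the driver's row with the newest location."""
    db.execute(
        "INSERT OR REPLACE INTO driver_locations VALUES (?, ?, ?)",
        (driver_id, latitude, longitude),
    )
    db.commit()

def fetch_location(driver_id: int):
    """Passenger side: one periodic query for the latest known location."""
    return db.execute(
        "SELECT latitude, longitude FROM driver_locations WHERE driver_id = ?",
        (driver_id,),
    ).fetchone()

save_location(123, 12.34, 56.78)
# Three polls while the driver has not moved: three queries, no new information.
polls = [fetch_location(123) for _ in range(3)]
save_location(123, 12.35, 56.79)
latest = fetch_location(123)
print(polls, latest)
```

Notice that every poll costs a query even when nothing has changed, which is the root of the drawbacks discussed next.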

This approach seems to work, but it has several drawbacks:

The Problem with Traditional Databases

1. Single Point of Failure

In a traditional database setup, if the database server goes down, the entire system becomes unavailable. Even with replication, failover mechanisms can introduce latency and complexity.

Example: Imagine an Uber-like app where the database storing driver locations crashes. Passengers would no longer see real-time updates, leading to a poor user experience.

2. High IOPS and Latency

Databases are designed for structured data storage and retrieval, not for handling millions of real-time events. As the number of writes and reads increases, the database can become a bottleneck, leading to high latency and reduced throughput.

Example: If thousands of drivers are sending location updates every few seconds, the database might struggle to keep up, causing delays in updating passenger apps.
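To get a feel for the load, here is a rough back-of-the-envelope estimate. All the numbers (10,000 drivers, one update every 4 seconds, 10,000 passengers polling every second) are made-up assumptions for illustration, not measurements.

```python
def requests_per_second(drivers: int, update_interval_s: float,
                        passengers: int, poll_interval_s: float):
    """Return (writes/sec, reads/sec) hitting the database in the polling design."""
    writes = drivers / update_interval_s      # each driver writes once per interval
    reads = passengers / poll_interval_s      # each passenger polls once per interval
    return writes, reads

# Hypothetical city-sized workload.
writes, reads = requests_per_second(
    drivers=10_000, update_interval_s=4,
    passengers=10_000, poll_interval_s=1,
)
print(f"writes/sec={writes:.0f}, reads/sec={reads:.0f}, total={writes + reads:.0f}")
```

Under these assumptions the database sees 12,500 operations per second, and most of the reads return a location the passenger has already seen.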

3. Limited Scalability

Scaling a traditional database horizontally (adding more servers) is challenging. Sharding and replication can help, but they add complexity and may not fully solve the problem.

Example: If Uber expands to a new city with thousands of new drivers, the database might require significant reconfiguration to handle the increased load.

Better Solution: Enter Event-Driven Architecture

To address the above challenges, modern systems use event-driven architecture. Instead of relying on a database for real-time updates, we treat each location update as an event. These events are produced and consumed in real time, enabling low-latency, high-throughput systems.

What is Event-Driven Architecture?

Event-driven architecture is a design paradigm that enables systems to detect, process, and respond to events in real time. An event is a significant change in state or an update that occurs within a system. It is a powerful approach for building scalable, responsive, and loosely coupled systems.
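The idea is easiest to see in a tiny in-process example. The `EventBus` class and the `location_updated` event name below are hypothetical, written just for this post; the point is that the publisher does not know or care who is listening.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Event = Dict[str, float]
Handler = Callable[[Event], None]

class EventBus:
    """A minimal in-process publish/subscribe hub (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> int:
        """Push the event to every subscriber; return how many were notified."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(event)
        return len(handlers)

bus = EventBus()
map_updates: List[Event] = []   # e.g. the component that moves the car icon
eta_updates: List[Event] = []   # e.g. a component that recomputes arrival time
bus.subscribe("location_updated", map_updates.append)
bus.subscribe("location_updated", eta_updates.append)

delivered = bus.publish(
    "location_updated", {"driver_id": 123, "latitude": 12.34, "longitude": 56.78}
)
print(delivered, map_updates, eta_updates)
```

Subscribers receive the update the moment it is published instead of asking for it repeatedly, and new subscribers can be added without touching the publisher.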

This is where the MVP of this blog, Apache Kafka, comes into play.

What is Apache Kafka?

Apache Kafka is a distributed event streaming platform designed to handle high volumes of data in real time. It acts as a messaging system that allows producers to publish events and consumers to subscribe to them. Kafka is highly scalable, fault-tolerant, and capable of processing millions of events per second.

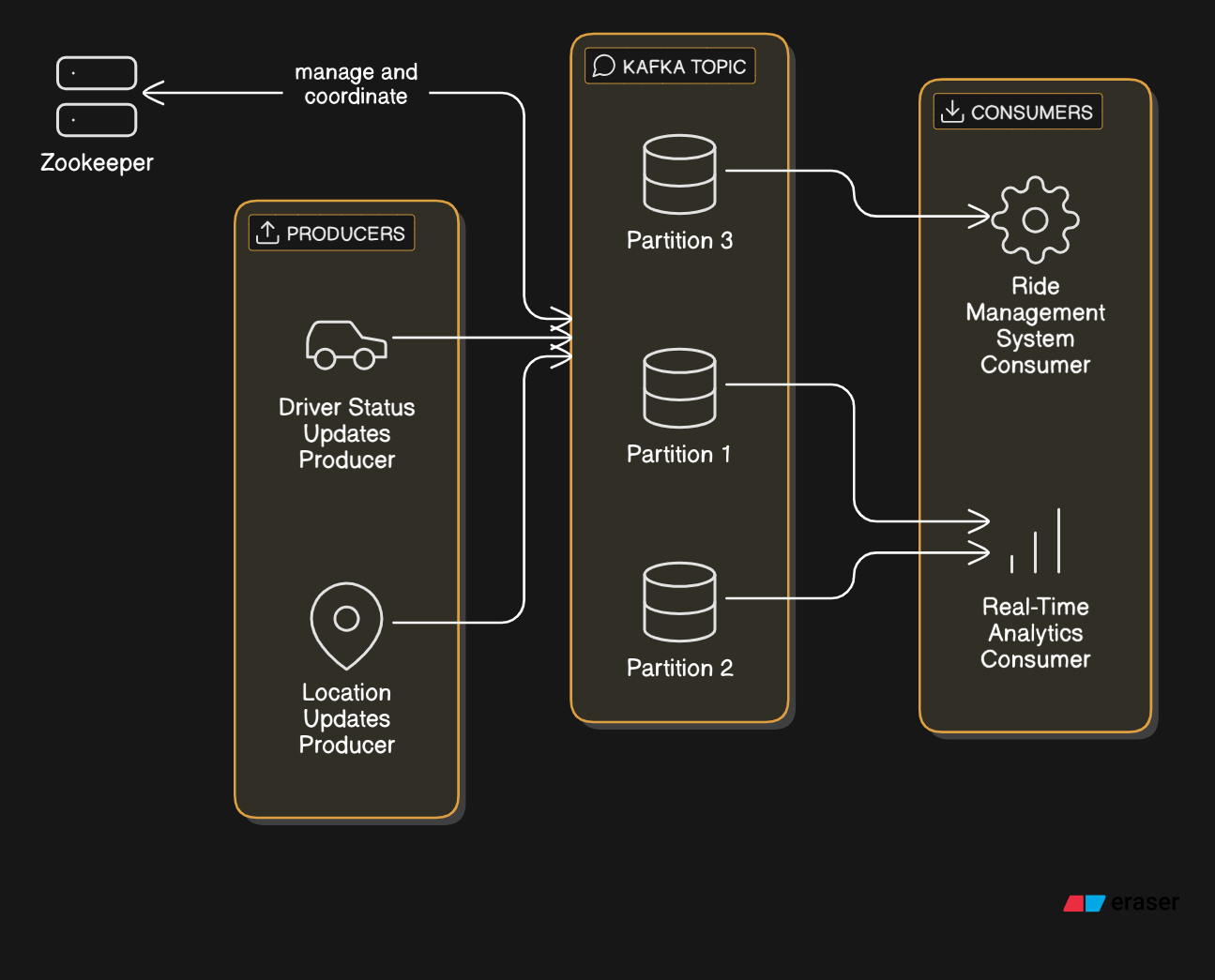

Kafka Architecture: Key Components

Producer: The application that sends events (location or status updates) to Kafka. In our case, the driver’s app acts as the producer, sending location updates.

Topic: A category, feed, or channel to which events are sent. For example, we could have a topic called driver-locations.

Broker: A Kafka server that stores and manages topics. A Kafka cluster consists of multiple brokers for scalability and fault tolerance.

Consumer: The application that consumes events from the Kafka brokers. Here, the passenger’s app is the consumer, fetching location updates.

Partition: Topics are divided into partitions that enable parallel processing. Each partition is an ordered, immutable sequence of events.

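ZooKeeper: It provides a centralized repository for configuration information, naming, synchronization, and group services. In simpler terms, ZooKeeper acts as the "brain" of the cluster, ensuring that all components work together seamlessly. Newer Kafka releases can also run without ZooKeeper by using the built-in KRaft mode, and Kafka 4.0 removed the ZooKeeper dependency entirely.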
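To make topics, partitions, and offsets more concrete, here is a toy in-memory model. It is not how Kafka is implemented: the `Topic` class is hypothetical, and CRC32 is only a stand-in for the key hashing a real client performs (the Java client's default partitioner uses murmur2).

```python
import zlib
from typing import List, Tuple

class Topic:
    """Toy model: a topic is a list of partitions, each an append-only list."""

    def __init__(self, name: str, num_partitions: int) -> None:
        self.name = name
        self.partitions: List[List[bytes]] = [[] for _ in range(num_partitions)]

    def partition_for(self, key: bytes) -> int:
        # Assumption: CRC32 stands in for the client's real key-hash function.
        return zlib.crc32(key) % len(self.partitions)

    def append(self, key: bytes, value: bytes) -> Tuple[int, int]:
        """Producer side: append to the key's partition, return (partition, offset)."""
        partition = self.partition_for(key)
        log = self.partitions[partition]
        log.append(value)
        return partition, len(log) - 1

    def read(self, partition: int, offset: int) -> List[bytes]:
        """Consumer side: read everything in one partition from an offset onward."""
        return self.partitions[partition][offset:]

topic = Topic("driver-locations", num_partitions=3)
first = topic.append(b"driver-123", b"loc-1")
second = topic.append(b"driver-123", b"loc-2")
print(first, second, topic.read(first[0], 0))
```

Both events share a key, so they land in the same partition with consecutive offsets, and a consumer reading that partition sees them in the order they were appended.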

How Kafka Solves the Real-Time Location Tracking Problem

Let’s understand this with an example:

Producing Events: The driver’s app sends location updates (e.g., {driver_id: 123, latitude: 12.34, longitude: 56.78}) to the driver-locations topic.

Storing Events: Kafka brokers store these events in partitions. Since partitions are distributed across multiple brokers, the system can handle high throughput.

Consuming Events: The passenger’s app subscribes to the driver-locations topic and receives real-time updates. Kafka preserves the order of events within a partition, so if all updates for a driver go to the same partition (for example, by using the driver ID as the message key), they are delivered in the order they were produced.
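Kafka itself stores keys and values as plain bytes, so the producer and consumer have to agree on an encoding. Below is a minimal sketch using JSON; the helper names `encode_event` and `decode_event` are my own, and using the driver ID as the key is a design choice assumed here, not something Kafka requires.

```python
import json
from typing import Dict, Tuple, Union

LocationEvent = Dict[str, Union[int, float]]

def encode_event(event: LocationEvent) -> Tuple[bytes, bytes]:
    """Producer side: turn a location update into a (key, value) pair of bytes."""
    key = str(event["driver_id"]).encode("utf-8")        # assumed key: the driver ID
    value = json.dumps(event, sort_keys=True).encode("utf-8")
    return key, value

def decode_event(value: bytes) -> LocationEvent:
    """Consumer side: turn the stored bytes back into a dictionary."""
    return json.loads(value.decode("utf-8"))

event = {"driver_id": 123, "latitude": 12.34, "longitude": 56.78}
key, value = encode_event(event)
print(key, value, decode_event(value))
```

Functions like these are the kind of thing you would plug into a Kafka client as a serializer and deserializer; the exact hook depends on the client library you use.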

Benefits of Using Kafka

Distributed Architecture

Kafka is a distributed system, meaning it runs on multiple servers (brokers) that work together as a cluster. This eliminates single points of failure.

How It Works:

A Kafka cluster consists of multiple brokers.

Each broker stores a subset of the data (partitions) and can handle requests independently.

If one broker goes down, the others continue to operate, ensuring the system remains available.

Example: In our Uber-like app, even if one Kafka broker fails, the other brokers in the cluster can continue to process location updates, ensuring passengers still see real-time driver locations.
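Here is a simplified picture of why losing one broker does not take the data offline. The round-robin placement below is an assumption made for readability; Kafka's real replica placement is more involved. Broker names and both function names are hypothetical.

```python
from typing import Dict, List

def assign_replicas(num_partitions: int, brokers: List[str],
                    replication_factor: int) -> Dict[int, List[str]]:
    """Place each partition's copies on consecutive brokers, round-robin."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        p: [brokers[(p + i) % len(brokers)] for i in range(replication_factor)]
        for p in range(num_partitions)
    }

def available_partitions(assignment: Dict[int, List[str]],
                         failed_broker: str) -> List[int]:
    """Partitions that still have at least one copy on a surviving broker."""
    return [
        p for p, replicas in assignment.items()
        if any(broker != failed_broker for broker in replicas)
    ]

brokers = ["broker-1", "broker-2", "broker-3"]
replicated = assign_replicas(6, brokers, replication_factor=2)
unreplicated = assign_replicas(6, brokers, replication_factor=1)

print(available_partitions(replicated, "broker-1"))    # every partition survives
print(available_partitions(unreplicated, "broker-1"))  # some partitions are gone
```

With two copies of each partition, any single broker can fail and all six partitions remain reachable. With only one copy, the partitions that lived on the failed broker become unavailable, which is why distribution and replication go hand in hand.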

Data Replication

Kafka replicates data across multiple brokers to ensure fault tolerance. Each partition has multiple replicas, and one broker is designated as the leader for that partition.

How It Works:

When a producer sends an event, it is written to the leader partition and replicated to follower partitions.

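If the leader broker fails, one of the in-sync followers is automatically elected as the new leader, so the partition stays available with minimal interruption. Events that were already replicated to the followers are not lost.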

Example: If a Kafka broker storing driver location updates fails, another broker with a replica of the data takes over, and the system continues to function seamlessly.
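The leader and follower behaviour can be mimicked with a small simulation. This is a deliberately simplified sketch: replication here is synchronous and the "election" just picks the next surviving replica, whereas real Kafka tracks in-sync replicas and uses a controller to elect leaders. The `ReplicatedPartition` class is hypothetical.

```python
from typing import Dict, List

class ReplicatedPartition:
    """Toy partition with one leader and several follower copies."""

    def __init__(self, replicas: List[str]) -> None:
        self.logs: Dict[str, List[str]] = {broker: [] for broker in replicas}
        self.leader = replicas[0]

    def append(self, event: str) -> int:
        """Write to the leader and copy to every follower; return the offset."""
        for log in self.logs.values():
            log.append(event)
        return len(self.logs[self.leader]) - 1

    def fail_broker(self, broker: str) -> None:
        """Drop a broker's copy; promote a surviving replica if it was the leader."""
        del self.logs[broker]
        if broker == self.leader:
            if not self.logs:
                raise RuntimeError("no replicas left for this partition")
            self.leader = next(iter(self.logs))   # simplistic stand-in for election

    def read(self, offset: int = 0) -> List[str]:
        return self.logs[self.leader][offset:]

partition = ReplicatedPartition(["broker-1", "broker-2", "broker-3"])
partition.append("loc-1")
partition.append("loc-2")
partition.fail_broker("broker-1")          # the leader goes down
next_offset = partition.append("loc-3")    # writes continue on the new leader
print(partition.leader, partition.read(), next_offset)
```

After the failure, the new leader still holds both earlier events, and the next write simply continues at the following offset.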

High Throughput and Low Latency

Kafka is optimized for high-throughput, low-latency event streaming. It achieves this through:

Partitioning: Topics are divided into partitions, allowing parallel processing of events.

Efficient Storage: Kafka uses an append-only log structure, which is highly efficient for write-heavy workloads.

Example: In our Uber-like app, Kafka can handle millions of location updates per second, ensuring passengers see smooth, real-time updates even during peak hours.
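To see why an append-only log is cheap to write, here is a minimal file-backed version. It is only a sketch: the 4-byte length prefix and the `AppendOnlyLog` class are my own assumptions, and Kafka's real on-disk format (segments, indexes, record batches) is far richer.

```python
import os
import struct
import tempfile
from typing import List

class AppendOnlyLog:
    """Length-prefixed records appended to one file; nothing is rewritten in place."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.positions: List[int] = []   # offset -> byte position in the file
        self.end = 0                     # current size of the file in bytes
        open(path, "wb").close()         # start with a fresh, empty file

    def append(self, record: bytes) -> int:
        """Write at the end of the file and return the record's offset."""
        with open(self.path, "ab") as f:
            f.write(struct.pack(">I", len(record)) + record)
        self.positions.append(self.end)
        self.end += 4 + len(record)
        return len(self.positions) - 1

    def read_from(self, offset: int) -> List[bytes]:
        """Sequentially read every record starting at the given offset."""
        records: List[bytes] = []
        if offset >= len(self.positions):
            return records
        with open(self.path, "rb") as f:
            f.seek(self.positions[offset])
            while True:
                header = f.read(4)
                if len(header) < 4:
                    break
                (length,) = struct.unpack(">I", header)
                records.append(f.read(length))
        return records

with tempfile.TemporaryDirectory() as tmp:
    log = AppendOnlyLog(os.path.join(tmp, "partition-0.log"))
    offsets = [log.append(f"update-{i}".encode()) for i in range(3)]
    tail = log.read_from(1)

print(offsets, tail)
```

Writes always go to the end of the file and reads are sequential from a known position, which is the access pattern disks and operating system caches handle best.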

Durability and Data Retention

Kafka is designed for durability. Events are stored on disk and replicated across multiple brokers, ensuring data is not lost even in the event of hardware failures.

How It Works:

Kafka retains events for a configurable period (e.g., 7 days, 30 days).

Consumers can replay events from the past, making Kafka suitable for use cases like analytics and auditing.

Example: If a passenger wants to review their trip history, Kafka can provide the location data for the entire trip, even if it happened days ago, as long as the events are still within the configured retention period.
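Retention and replay can be modelled in a few lines as well. This is a simplified sketch with a hypothetical `RetainedLog` class: timestamps are passed in by hand, and expiry is per event, whereas Kafka actually deletes whole log segments.

```python
from typing import List, Tuple

DAY = 24 * 60 * 60  # seconds

class RetainedLog:
    """Toy log that drops events older than the retention period."""

    def __init__(self, retention_seconds: float) -> None:
        self.retention_seconds = retention_seconds
        self.base_offset = 0                       # offset of the oldest kept event
        self.events: List[Tuple[float, str]] = []  # (timestamp, payload)

    def append(self, timestamp: float, payload: str) -> int:
        self.events.append((timestamp, payload))
        return self.base_offset + len(self.events) - 1

    def enforce_retention(self, now: float) -> int:
        """Delete expired events from the front; return how many were removed."""
        cutoff = now - self.retention_seconds
        expired = 0
        while expired < len(self.events) and self.events[expired][0] < cutoff:
            expired += 1
        del self.events[:expired]
        self.base_offset += expired
        return expired

    def replay(self, from_offset: int) -> List[str]:
        """Re-read events from an offset; expired offsets are skipped."""
        start = max(from_offset, self.base_offset) - self.base_offset
        return [payload for _, payload in self.events[start:]]

log = RetainedLog(retention_seconds=7 * DAY)
log.append(0 * DAY, "trip-start")
log.append(1 * DAY, "trip-mid")
log.append(6 * DAY, "trip-end")

before = log.replay(0)
removed = log.enforce_retention(now=7.5 * DAY)
after = log.replay(0)
print(before, removed, after)
```

Before the cleanup a consumer can replay the whole trip from offset 0. Once the first event is older than 7 days it is removed, and a replay from offset 0 starts at the oldest event that is still retained.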

Conclusion

Real-time location tracking is a fascinating problem that showcases the power of event-driven architecture. By using Apache Kafka, we can build scalable, low-latency systems that handle millions of events per second. Whether it’s tracking a cab on a map or monitoring IoT devices, Kafka provides a robust solution for real-time data processing. Next time you see that little car icon moving on your app, you’ll know the incredible engineering that makes it possible.

I have built a small prototype for this using Node.js. GitHub Link